NPTEL Reinforcement Learning Week 8 Assignment Answers 2024

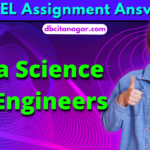

1. Assuming that we use tile-coding state aggregation, which of the following is the correct update equation for replacing traces, for a tile “h”, when its indicator variable is 1?

Answer :-

2. Assertion: LSPI can be used for continuous action spaces.

Reason: We can use samples of (state,action) pairs to train a regressor to approximate policy.

- Both Assertion and Reason are true, and Reason is correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not correct explanation for assertion.

- Assertion is true and Reason is false

- Both Assertion and Reason are false

Answer :-

3. State true or false for the following statement.

Statement: For the LSTD and LSTDQ methods, as we gather more samples, we can incrementally append data to the $\tilde{A}$ and $\tilde{b}$ matrices. This allows us to stop and solve for the value of $\theta^{\pi}$ at any point in a sampled trajectory.

- True

- False

Answer :-
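To make the statement in question 3 concrete, here is a minimal sketch of incremental accumulation for LSTD, assuming the common form $\tilde{A} = \sum \phi(s)\,(\phi(s) - \gamma\phi(s'))^\top$ and $\tilde{b} = \sum \phi(s)\,r$. The class name `IncrementalLSTD`, the helper `solve_linear`, and the two-state example are illustrative assumptions, not part of the assignment.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the accumulated A and b stay untouched.
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("A is singular; more samples are needed")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


class IncrementalLSTD:
    """Hypothetical helper: accumulates A and b one sample at a time."""

    def __init__(self, k, gamma):
        self.k = k
        self.gamma = gamma
        self.A = [[0.0] * k for _ in range(k)]
        self.b = [0.0] * k

    def add_sample(self, phi, reward, phi_next):
        # A += phi (phi - gamma * phi_next)^T  and  b += phi * reward
        for i in range(self.k):
            for j in range(self.k):
                self.A[i][j] += phi[i] * (phi[j] - self.gamma * phi_next[j])
            self.b[i] += phi[i] * reward

    def solve(self):
        # Can be called after any number of samples, provided A is invertible.
        return solve_linear(self.A, self.b)


# Assumed toy chain with one-hot features: s0 -> s1 (reward 1), s1 -> terminal (reward 2).
model = IncrementalLSTD(k=2, gamma=0.5)
model.add_sample([1.0, 0.0], 1.0, [0.0, 1.0])
model.add_sample([0.0, 1.0], 2.0, [0.0, 0.0])  # terminal state has zero features
theta = model.solve()
print(theta)
```

Because each sample only adds to the running sums, the parameter vector can be solved for whenever the accumulated matrix is invertible, and earlier samples never need to be revisited.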

4. You are given a training set of vectors Φ, so that each row of the matrix Φ corresponds to k attributes of a single training sample. Suppose that you are asked to find a linear function that minimizes the mean squared error for a given set of stationary targets y using linear regression. State true/false for the following statement.

Statement: If the column vectors of Φ are linearly independent, then there exists a unique linear function that minimizes the mean-squared-error.

- True

- False

Answer :-
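The role of linear independence in question 4 can be illustrated with the normal equations $\Phi^\top \Phi\, w = \Phi^\top y$. The sketch below is restricted to two features so the $2 \times 2$ system can be solved in closed form. The function name and the sample data are assumptions chosen for illustration.

```python
def fit_two_feature_least_squares(Phi, y):
    """Least-squares weights for a design matrix with exactly two columns."""
    # Entries of the Gram matrix G = Phi^T Phi and the vector c = Phi^T y.
    g00 = sum(row[0] * row[0] for row in Phi)
    g01 = sum(row[0] * row[1] for row in Phi)
    g11 = sum(row[1] * row[1] for row in Phi)
    c0 = sum(row[0] * t for row, t in zip(Phi, y))
    c1 = sum(row[1] * t for row, t in zip(Phi, y))
    # det(G) is nonzero exactly when the two columns are linearly independent.
    det = g00 * g11 - g01 * g01
    if abs(det) < 1e-12:
        raise ValueError("columns of Phi are linearly dependent")
    # Cramer's rule for the 2x2 system G w = c.
    w0 = (g11 * c0 - g01 * c1) / det
    w1 = (g00 * c1 - g01 * c0) / det
    return w0, w1


# Assumed data: a bias column and one attribute, targets generated by y = 1 + 2x.
Phi = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
y = [1.0, 3.0, 5.0]
weights = fit_two_feature_least_squares(Phi, y)
print(weights)
```

When the columns are dependent, the Gram matrix is singular and the normal equations no longer have a unique solution, which the sketch reports as an error rather than returning an arbitrary minimizer.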

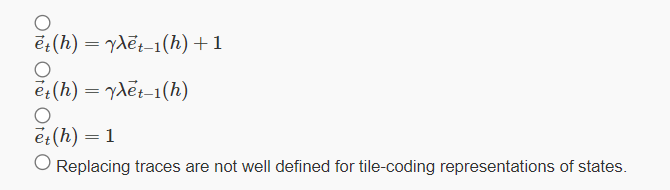

5.

Answer :-

6. Which of the following statements are true?

- Function approximation allows us to deal with continuous state spaces.

- A lookup table is a linear function approximator.

- State aggregates do not overlap in coarse-coding.

- None of the above

Answer :-

7. In which of the following cases would defining the loss of a function approximator as $\sum_{s \in S} (\hat{V}(s) - V(s))^2$ lead to poor performance? Consider ‘relevant’ states to be those which are visited frequently when executing near-optimal policies.

- Large state space with small percentage of relevant states.

- Small state space with large percentage of relevant states.

- Large state space with large percentage of relevant states.

- None of the above

Answer :-

8. Assertion: It is not possible to use look-up table based methods to solve continuous state or action space problems. (Assume discretization of continuous space is not allowed)

Reason: For continuous state or action space, there are an infinite number of states/actions.

- Both Assertion and Reason are true, and Reason is correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not correct explanation for assertion.

- Assertion is true, Reason is false

- Both Assertion and Reason are false

Answer :-

9. Assertion: If we make incremental updates for a linear approximation of the value function $\hat{v}$ under a policy $\pi$, using gradient descent to minimize the mean-squared error between $\hat{v}(s_t)$ and bootstrapped targets $R_t + \gamma \hat{v}(s_{t+1})$, then we will eventually converge to the same solution that we would have if we used the true $v^{\pi}$ values as targets instead.

Reason: Each update moves $\hat{v}$ closer to $v^{\pi}$, so eventually the bootstrapped targets $R_t + \gamma \hat{v}(s_{t+1})$ will converge to the true $v^{\pi}(s_t)$ values.

(Assume that we sample on-policy)

- Both Assertion and Reason are true, and Reason is correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not correct explanation for assertion.

- Assertion is true and Reason is false

- Both Assertion and Reason are false

Answer :-

10. Assertion: To solve the given optimization problem for some states with a linear function approximator,

$\pi_{t+1}(s) = \arg\max_a \hat{Q}^{\pi_t}(s, a)$

in the case of a discrete action space, we need to formulate a classification problem.

Reason: The given problem is equivalent to solving:

$\pi_{t+1}(s) = \arg\max_a \Phi(s)^\top \hat{\Theta}^{\pi_t}(a)$

For a discrete action space, as we can’t maximize it explicitly, we need to formulate a classification problem.

- Both Assertion and Reason are true, and Reason is correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not correct explanation for assertion.

- Assertion is true, Reason is false

- Both Assertion and Reason are false

Answer :-

11. Which of the following is/are true about the LSTD and LSTDQ algorithm?

- Both are iterative algorithms, where the parameter estimates are updated using the gradient information of the loss function.

- Both LSTD and LSTDQ can reuse samples.

- Both LSTD and LSTDQ are linear function approximation methods.

- None of the above

Answer :-

12. Consider the following small part of a gridworld. Blocks marked ‘x’ are inaccessible, and empty blocks are accessible. We want a linear parameterization of states that encodes information about neighbouring states: ‘0’ indicates that a neighbour is inaccessible, and ‘1’ indicates that it is accessible. A state’s vector is the vector of the statuses of its neighbours in the order (top, right, bottom, left). For the following sub-gridworld, what will be the state vector for the middle state?

- (1,1,0,0)

- (0,1,1,0)

- (0,1,0,1)

- (1,0,1,0)

Answer :-
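The neighbour-based parameterization described in question 12 could be computed along the following lines. The grid below is a hypothetical example (the sub-gridworld from the question is not reproduced here), and treating cells outside the grid as inaccessible is an added assumption.

```python
def neighbour_features(grid, row, col):
    """Return accessibility flags of the neighbours in (top, right, bottom, left) order."""

    def accessible(r, c):
        # Assumption: anything outside the grid counts as inaccessible.
        inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
        return 1 if inside and grid[r][c] != "x" else 0

    return (
        accessible(row - 1, col),  # top
        accessible(row, col + 1),  # right
        accessible(row + 1, col),  # bottom
        accessible(row, col - 1),  # left
    )


# Hypothetical 3x3 grid: 'x' marks an inaccessible block, '.' an accessible one.
example_grid = [
    "...",
    "x..",
    "...",
]
middle = neighbour_features(example_grid, 1, 1)
print(middle)
```

For this hypothetical grid only the left neighbour of the middle cell is blocked, so only the last component of the vector is 0.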

13. Assertion: When minimizing mean-squared-error to approximate the value of states under a given policy π, it is important that we draw samples on-policy.

Reason: Sampling on-policy makes the training data approximately reflect the steady state distribution of states under the policy π.

- Both Assertion and Reason are true, and Reason is correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not correct explanation for assertion.

- Assertion is true and Reason is false

- Both Assertion and Reason are false

Answer :-

14. Tile coding is a method of state aggregation for gridworld problems. Consider the following statements.

(i) The number of indicators for each state is equal to number of tilings.

(ii) Tile coding cannot be used in continuous state spaces.

(iii) Tile coding is also a form of Coarse coding.

Which of the above statements are true?

- (iii) only

- (i), (iii)

- (i) only

- (i), (ii), (iii)

Answer :-
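As background for question 14, here is a minimal sketch of one-dimensional tile coding with uniformly offset tilings. The function name, the offset scheme, and the index layout are assumptions for illustration. Real implementations often use hashing and multiple dimensions.

```python
import math


def active_tiles(x, low, high, tiles_per_tiling, num_tilings):
    """Return one active tile index per tiling for a scalar input x in [low, high]."""
    width = (high - low) / tiles_per_tiling
    active = []
    for t in range(num_tilings):
        # Each tiling is shifted by a fraction of the tile width.
        offset = t * width / num_tilings
        idx = int(math.floor((x - low + offset) / width))
        # The shift means a tiling needs one extra tile to cover the range.
        idx = min(max(idx, 0), tiles_per_tiling)
        # Give every tiling its own block of tiles_per_tiling + 1 indices.
        active.append(t * (tiles_per_tiling + 1) + idx)
    return active


# Two nearby inputs on [0, 1] with 4 tiles per tiling and 2 tilings.
tiles_a = active_tiles(0.5, 0.0, 1.0, 4, 2)
tiles_b = active_tiles(0.45, 0.0, 1.0, 4, 2)
print(tiles_a, tiles_b)
```

In this sketch each input activates exactly one tile in every tiling, and nearby inputs share some of their active tiles, which is how the overlapping tilings generalize between neighbouring states.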

15.

Answer :-