NPTEL Introduction to Machine Learning Week 11 Assignment Answers 2024

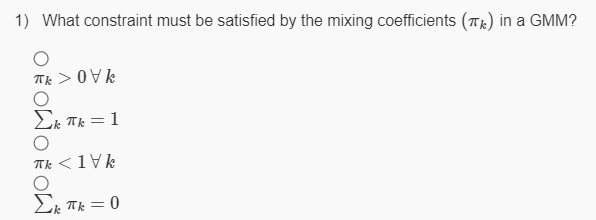

1.

Answer :-

2. The EM algorithm is guaranteed to decrease the value of its objective function on any iteration.

- True

- False

Answer :-
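Background for this question, as a minimal sketch: the objective EM works on is the data log-likelihood, which it maximizes, and one E-step plus one M-step cannot lower it. The code assumes a 1-D, two-component Gaussian mixture. The data, the starting parameters and the helper names (`normal_pdf`, `log_likelihood`, `em_step`) are hypothetical and chosen only for illustration.

```python
import math

def normal_pdf(x, mu, var):
    """Density of a 1-D Gaussian N(mu, var) at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def log_likelihood(data, weights, means, variances):
    """Sum over samples of log sum_k pi_k * N(x | mu_k, var_k)."""
    return sum(
        math.log(sum(w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)))
        for x in data
    )

def em_step(data, weights, means, variances):
    """One E-step followed by one M-step for a 1-D Gaussian mixture."""
    n = len(data)
    # E-step: responsibilities r[k] = P(z = k | x, current parameters) for every sample
    resp = []
    for x in data:
        p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
        total = sum(p)
        resp.append([pk / total for pk in p])
    # M-step: closed-form updates that maximize the expected complete-data log-likelihood
    new_w, new_m, new_v = [], [], []
    for k in range(len(weights)):
        nk = sum(r[k] for r in resp)
        mk = sum(r[k] * x for r, x in zip(resp, data)) / nk
        vk = sum(r[k] * (x - mk) ** 2 for r, x in zip(resp, data)) / nk
        new_w.append(nk / n)
        new_m.append(mk)
        new_v.append(vk)
    return new_w, new_m, new_v

# Hypothetical data: two groups near -2 and +2 plus one point in between.
data = [-2.3, -1.9, -2.1, -1.6, -2.4, 1.8, 2.2, 2.5, 1.7, 2.0, 0.3]
params = ([0.5, 0.5], [-0.5, 0.5], [1.0, 1.0])  # hypothetical starting weights, means, variances

history = [log_likelihood(data, *params)]
for _ in range(20):
    params = em_step(data, *params)
    history.append(log_likelihood(data, *params))

print([round(h, 3) for h in history[:5]])
```

The recorded sequence in `history` never goes down (up to floating-point rounding); once EM has converged it simply stays flat.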

3. Why might the EM algorithm for GMMs converge to a local maximum rather than the global maximum of the likelihood function?

- The algorithm is not guaranteed to increase the likelihood at each iteration

- The likelihood function is non-convex

- The responsibilities are incorrectly calculated

- The number of components K is too small

Answer :-
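A small illustration of why the GMM likelihood surface has more than one peak. This is a minimal sketch with hypothetical data, equal mixing weights and a fixed unit variance, so only the two means vary. Swapping the component labels gives exactly the same likelihood, yet the parameter vector halfway between the two labelings scores worse. A concave log-likelihood (equivalently, a convex negative log-likelihood) could not behave this way. Because the surface is not concave, it can also have peaks of unequal height, and EM, which only climbs locally, may stop at a lower one.

```python
import math

def gmm_log_likelihood(data, means, var=1.0, weights=(0.5, 0.5)):
    """Log-likelihood of a 1-D, 2-component GMM with fixed variance and weights."""
    total = 0.0
    for x in data:
        dens = sum(
            w * math.exp(-(x - m) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)
            for w, m in zip(weights, means)
        )
        total += math.log(dens)
    return total

data = [-2.2, -2.0, -1.8, 1.8, 2.0, 2.2]  # hypothetical: two well-separated groups

a = gmm_log_likelihood(data, means=(-2.0, 2.0))   # a good fit
b = gmm_log_likelihood(data, means=(2.0, -2.0))   # the same fit with labels swapped
mid = gmm_log_likelihood(data, means=(0.0, 0.0))  # midpoint of the two parameter vectors

# Two equally good points with a worse point between them:
# the log-likelihood cannot be concave in the means.
print(a, b, mid)
```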

4. What does soft clustering mean in GMMs?

- There may be samples that are outside of any cluster boundary.

- The updates during maximum likelihood are taken in small steps, to guarantee convergence.

- It restricts the underlying distribution to be Gaussian.

- Samples are assigned probabilities of belonging to a cluster.

Answer :-
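Soft assignment, as a minimal sketch: instead of a single hard label, each sample gets a responsibility P(component k | x) for every component, and these sum to 1. This is the quantity EM's E-step computes. The two-component model and the helper names below are hypothetical.

```python
import math

def gaussian_pdf(x, mu, var):
    """Density of a 1-D Gaussian N(mu, var) at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def responsibilities(x, weights, means, variances):
    """Soft assignment: P(component k | x) for every component k."""
    joint = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    evidence = sum(joint)  # p(x) under the mixture
    return [j / evidence for j in joint]

# Hypothetical two-component model: clusters centred at 0 and 4, unit variance.
weights, means, variances = [0.5, 0.5], [0.0, 4.0], [1.0, 1.0]

for x in (0.0, 2.0, 3.0):
    print(x, [round(r, 3) for r in responsibilities(x, weights, means, variances)])
```

The point midway between the centres (x = 2.0) is split evenly, and x = 3.0 leans towards the second component while keeping a small probability for the first. A hard clustering would give each point exactly one label.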

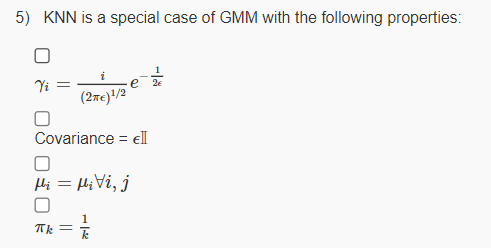

5.

Answer :-

6. We apply the Expectation Maximization algorithm to f(D,Z,θ) where D denotes the data, Z denotes the hidden variables and θ the variables we seek to optimize. Which of the following are correct?

- EM will always return the same solution which may not be optimal

- EM will always return the same solution which must be optimal

- The solution depends on the initialization

Answer :-
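Initialization dependence can be seen with a sketch under simplifying assumptions: 1-D data, K = 2, equal mixing weights and a fixed shared variance, so only the means are updated. The data, the starting points and the helper name `em_means_only` are hypothetical. Two runs of the same EM code on the same data end at different solutions.

```python
import math

def em_means_only(data, means, var=1.0, iters=50):
    """EM for a 1-D GMM with equal weights and a fixed shared variance; only the means are learned."""
    means = list(means)
    K = len(means)
    for _ in range(iters):
        # E-step: equal weights and the shared normalizing constant cancel when normalizing
        resp = []
        for x in data:
            p = [math.exp(-(x - m) ** 2 / (2 * var)) for m in means]
            s = sum(p)
            resp.append([pk / s for pk in p])
        # M-step: each mean becomes the responsibility-weighted average of the data
        means = [
            sum(r[k] * x for r, x in zip(resp, data)) / sum(r[k] for r in resp)
            for k in range(K)
        ]
    return means

# Hypothetical data: three tight, equally sized groups at -5, 0 and 5, fitted with K = 2.
data = [c + d for c in (-5.0, 0.0, 5.0) for d in (-0.5, 0.0, 0.5)]

run_a = sorted(em_means_only(data, [-5.0, 2.5]))  # one component ends up covering the 0 and 5 groups
run_b = sorted(em_means_only(data, [-2.5, 5.0]))  # one component ends up covering the -5 and 0 groups
print(run_a, run_b)
```

Here the two answers are mirror images with equal likelihood only because the hypothetical data are symmetric. On less symmetric data, different starts can also end at solutions with different likelihoods, which is why EM is commonly restarted from several initializations.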

7. True or False: Iterating between the E-step and M-step of EM algorithms always converges to a local optimum of the likelihood.

- True

- False

Answer :-
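EM is only guaranteed to reach a stationary point of the likelihood, and a stationary point need not be a local maximum. A minimal sketch, assuming means-only EM with equal weights and a fixed unit variance (the data, starting values and helper names are hypothetical): if both components start with the same mean, they always receive equal responsibilities and never separate, whereas a tiny asymmetry lets EM reach a much higher likelihood.

```python
import math

def log_lik(data, means, var=1.0):
    """Log-likelihood of an equal-weight 1-D GMM with a fixed shared variance."""
    K = len(means)
    c = 1.0 / (K * math.sqrt(2 * math.pi * var))
    return sum(
        math.log(c * sum(math.exp(-(x - m) ** 2 / (2 * var)) for m in means))
        for x in data
    )

def em_means(data, means, var=1.0, iters=100):
    """Means-only EM (equal weights, fixed variance)."""
    means = list(means)
    for _ in range(iters):
        # E-step: normalized responsibilities under the current means
        resp = []
        for x in data:
            p = [math.exp(-(x - m) ** 2 / (2 * var)) for m in means]
            s = sum(p)
            resp.append([pk / s for pk in p])
        # M-step: responsibility-weighted means
        means = [
            sum(r[k] * x for r, x in zip(resp, data)) / sum(r[k] for r in resp)
            for k in range(len(means))
        ]
    return means

data = [-2.2, -2.0, -1.8, 1.8, 2.0, 2.2]  # hypothetical: two clear groups

stuck = em_means(data, [0.5, 0.5])    # identical components: EM never splits them
escaped = em_means(data, [0.4, 0.6])  # a tiny asymmetry lets the means separate

print(stuck, log_lik(data, stuck))
print(escaped, log_lik(data, escaped))
```

For this data the identical-component start is a saddle point: moving the two means apart in opposite directions increases the likelihood, but EM's symmetric updates never take that step.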

8. The number of parameters needed to specify a Gaussian Mixture Model with 4 clusters, data of dimension 5, and diagonal covariances is:

- Less than 21

- Between 21 and 30

- Between 31 and 40

- Between 41 and 50

Answer :-
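The count can be worked out directly. Each of the K components needs d mean entries and, with diagonal covariance, d variances. The K mixing weights contribute K - 1 free values because they must sum to 1. A minimal helper follows; the function name and the extra `"full"` option are illustrative additions, not part of the question.

```python
def gmm_param_count(K, d, covariance="diag"):
    """Number of free parameters of a K-component Gaussian mixture in d dimensions."""
    means = K * d
    if covariance == "diag":
        covs = K * d                  # one variance per dimension per component
    elif covariance == "full":
        covs = K * d * (d + 1) // 2   # a symmetric d x d matrix per component
    else:
        raise ValueError("covariance must be 'diag' or 'full'")
    mixing = K - 1                    # weights sum to 1, so the last one is determined
    return means + covs + mixing

print(gmm_param_count(4, 5))
```

For K = 4 and d = 5 this gives 20 + 20 + 3 = 43. Counting all four mixing weights instead gives 44. Either way the total lies between 41 and 50.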