NPTEL Deep Learning for Computer Vision Week 8 Assignment Answers 2024

1. Match the following:

- 1 → iii, 2 → iv, 3 → ii, 4 → i

- 1 → iv, 2 → i, 3 → iii, 4 → ii

- 1 → i, 2 → ii, 3 → iii, 4 → iv

- 1 → i, 2 → iii, 3 → ii, 4 → iv

Answer :-

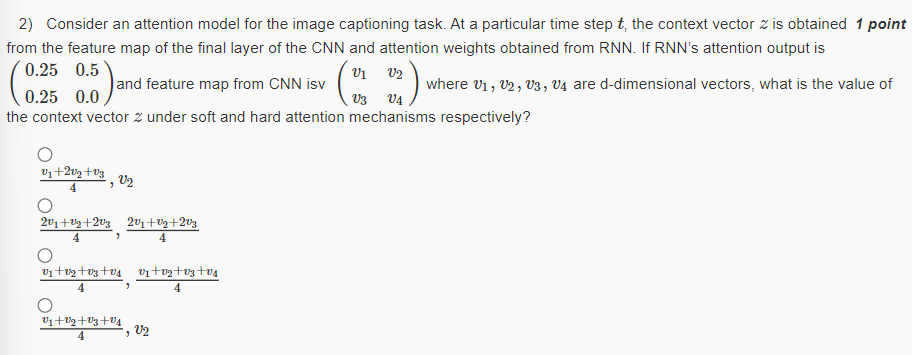

2.

Answer :-

3. Which of the following statements are true? (Select all options that apply)

- The number of learnable parameters in an RNN grows exponentially with the length of the input sequence.

- An image classification task with a batch size of 16 is a sequential learning problem.

- In an RNN, the current hidden state h_t depends not only on the previous hidden state h_{t-1} but also, implicitly, on earlier hidden states.

- Generating cricket commentary for a corresponding video snippet is a sequence learning problem.

Answer :-

4. Which one of the following statements is true?

- Attention mechanisms cannot be applied to the bidirectional RNN model.

- An image captioning network cannot be trained end-to-end even though we are using 2 different modalities to train the network.

- One of the key components of the vanilla transformer is its recurrent connections, which help it deal with variable input lengths.

- All of the above

- None of the above

Answer :-

5. Match the following ways of boosting image captioning techniques with attributes. Here, I = Image; A = Image Attributes; f(.) is the function applied on them.

- 1→iii, 2→ii, 3→v, 4→iv, 5→i

- 1→iii, 2→iv, 3→i, 4→ii, 5→v

- 1→iii, 2→iv, 3→v, 4→ii, 5→i

- 1→i, 2→iii, 3→iv, 4→ii, 5→v

Answer :-

6. Which of the following statements are true? (Select all possible correct options)

- An autoencoder can be equivalent to Principal Component Analysis (PCA), provided we make use of non-linear activation functions

- When using global attention on temporal data, alignment weights are learnt for encoder hidden representations for all time steps

- Positional encoding is an important component of the transformer architecture as it conveys information about order in a given sequence

- It is not possible to generate different captions for the same image that have similar meaning but different tone/style

- Autoencoders cannot be used for data compression as their input and output dimensions are different

Answer :-

7. Which of the following is true regarding Hard Attention and Soft Attention?

- Hard Attention is smooth and differentiable

- Variance reduction techniques are used to train Hard Attention models

- Soft Attention is computationally cheaper than Hard Attention when the source input is large

- All of the above

- None of the above

Answer :-

8. Match the following attention mechanisms to their corresponding alignment score functions:

- 1→iii, 2→ii, 3→v, 4→iv, 5→i

- 1→iii, 2→iv, 3→i, 4→ii, 5→v

- 1→i, 2→iii, 3→iv, 4→ii, 5→v

- 1→v, 2→iv, 3→iii, 4→i, 5→ii

Answer :-

9. Which of the following statements are true? (Select all that apply)

- The number of learnable parameters in an RNN grows exponentially with the length of the input sequence

- Long sentences give rise to the vanishing gradient problem

- Electrocardiogram signal classification is a sequence learning problem

- RNNs can have more than one hidden layer

Answer :-

The RNN given below is used for classification:

The dimensions of the layers of RNN are as follows:

- Input X ∈ R^132

- Hidden Layer 1: D1 ∈ R^256

- Hidden Layer 2: D2 ∈ R^128

- Number of classes: 15

Note: Do not consider the bias term.

10. Number of weights in Weight Matrix U1 is

Answer :-

11. Number of weights in Weight Matrix V1 is

Answer :-

12. Number of weights in Weight Matrix U2 is

Answer :-

13. Number of weights in Weight Matrix V2 is

Answer :-

14. Number of weights in Weight Matrix W is

Answer :-
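The figure that names the matrices is not reproduced here, so the following is only a minimal sketch of the arithmetic: a bias-free weight matrix connecting a layer of size `fan_in` to a layer of size `fan_out` has `fan_in * fan_out` entries. The dictionary labels and the helper `dense_weight_count` are hypothetical; which of U1, V1, U2, V2 and W corresponds to which connection depends on the original diagram.

```python
# Dimensions taken from the question; bias terms are ignored as instructed.
INPUT_DIM = 132
HIDDEN1_DIM = 256
HIDDEN2_DIM = 128
NUM_CLASSES = 15


def dense_weight_count(fan_in: int, fan_out: int) -> int:
    """Number of entries in a bias-free weight matrix of shape (fan_out, fan_in)."""
    return fan_in * fan_out


# Hypothetical labels: the mapping to U1, V1, U2, V2, W is NOT given in the text
# and must be read off the (missing) figure.
weight_counts = {
    "input -> hidden 1": dense_weight_count(INPUT_DIM, HIDDEN1_DIM),
    "hidden 1 -> hidden 1 (recurrent)": dense_weight_count(HIDDEN1_DIM, HIDDEN1_DIM),
    "hidden 1 -> hidden 2": dense_weight_count(HIDDEN1_DIM, HIDDEN2_DIM),
    "hidden 2 -> hidden 2 (recurrent)": dense_weight_count(HIDDEN2_DIM, HIDDEN2_DIM),
    "hidden 2 -> output": dense_weight_count(HIDDEN2_DIM, NUM_CLASSES),
}

for name, count in weight_counts.items():
    print(f"{name}: {count}")
```

Note that none of these counts depends on the sequence length, since the same matrices are reused at every time step.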

Consider an LSTM cell, and the data given below:

- x_t = 3

- h_{t-1} = 2

- W_f = [0.1, 0.2]

- b_f = 0

- W_i = [-0.1, -0.3]

- b_i = -1

- W_o = [-1.2, -0.2]

- b_o = 1.5

- W_C = [3, -1]

- b_C = 0.5

- C_{t-1} = -0.5

Compute the following quantities (round to 3 decimal places; refer to the formulas from the lecture slides for computation). Note that we use scalars and vectors for ease of calculation here; in a realistic setup, these will be matrices and not scalars.

15. Forget Gate f_t

Answer :-

16. Input Gate i_t

Answer :-

17. Output Gate o_t

Answer :-

18. New cell content C̃_t

Answer :-

19. Cell State C_t

Answer :-

20. Hidden state h_t

Answer :-
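The lecture-slide formulas are not reproduced here, so the following is a sketch under an assumption: it uses the commonly cited LSTM equations with each weight vector applied to the concatenation [h_{t-1}, x_t] in that order. If the slides use the order [x_t, h_{t-1}] instead, swap the two entries of `concat`. The helper names `sigmoid` and `dot` are illustrative only.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def dot(w, v) -> float:
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(w, v))


# Values from the question.
x_t, h_prev, c_prev = 3.0, 2.0, -0.5
W_f, b_f = [0.1, 0.2], 0.0
W_i, b_i = [-0.1, -0.3], -1.0
W_o, b_o = [-1.2, -0.2], 1.5
W_C, b_C = [3.0, -1.0], 0.5

# ASSUMPTION: weights act on [h_{t-1}, x_t]; the slides may use the other order.
concat = [h_prev, x_t]

f_t = sigmoid(dot(W_f, concat) + b_f)        # forget gate
i_t = sigmoid(dot(W_i, concat) + b_i)        # input gate
o_t = sigmoid(dot(W_o, concat) + b_o)        # output gate
c_tilde = math.tanh(dot(W_C, concat) + b_C)  # new (candidate) cell content
c_t = f_t * c_prev + i_t * c_tilde           # cell state
h_t = o_t * math.tanh(c_t)                   # hidden state

for name, value in [("f_t", f_t), ("i_t", i_t), ("o_t", o_t),
                    ("C~_t", c_tilde), ("C_t", c_t), ("h_t", h_t)]:
    print(f"{name} = {value:.3f}")
```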

Consider a GRU cell, and the following data:

- x_t = 1.5

- h_{t-1} = -0.5

- W_z = [1.1, 1.2]

- W_r = [-1.1, -1.3]

- W = [-1, -0.5]

Compute the following quantities (round to 3 decimal places; bias values can be considered zero; refer to the formulas from the lecture slides for computation). Note that we use scalars and vectors for ease of calculation here; in a realistic setup, these will be matrices and not scalars.

21. Update Gate z_t

Answer :-

22. Reset Gate r_t

Answer :-

23. New hidden state content h̃_t

Answer :-

24. Hidden state h_t

Answer :-
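As with the LSTM, the slide formulas are not shown, so this is a sketch under two assumptions: weights act on the concatenation [h_{t-1}, x_t] (with the reset gate scaling h_{t-1} inside the candidate), and the final update is h_t = (1 - z_t) * h_{t-1} + z_t * h̃_t. Some references swap the roles of z_t and (1 - z_t); if the slides do, change the last line of the computation accordingly. Helper names are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def dot(w, v) -> float:
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(w, v))


# Values from the question; biases are zero as stated.
x_t, h_prev = 1.5, -0.5
W_z = [1.1, 1.2]
W_r = [-1.1, -1.3]
W = [-1.0, -0.5]

# ASSUMPTION: weights act on [h_{t-1}, x_t]; the slides may use the other order.
z_t = sigmoid(dot(W_z, [h_prev, x_t]))           # update gate
r_t = sigmoid(dot(W_r, [h_prev, x_t]))           # reset gate
h_tilde = math.tanh(dot(W, [r_t * h_prev, x_t]))  # new hidden state content

# ASSUMPTION: z_t weights the new content; some texts use the opposite convention.
h_t = (1.0 - z_t) * h_prev + z_t * h_tilde       # hidden state

for name, value in [("z_t", z_t), ("r_t", r_t), ("h~_t", h_tilde), ("h_t", h_t)]:
    print(f"{name} = {value:.3f}")
```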