NPTEL Deep Learning – IIT Ropar Week 9 Assignment Answers 2024

1. Consider the following corpus: “human machine interface for computer applications. user opinion of computer system response time. user interface management system. system engineering for improved response time”. What is the size of the vocabulary of the above corpus?

- 13

- 14

- 15

- 16

Answer :-
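One way to check a vocabulary count like this is to tokenize the corpus and collect the distinct words. The sketch below assumes lowercased, whitespace-separated tokens with surrounding punctuation stripped, and no stop-word removal or stemming; a different tokenization convention could give a different count.

```python
import string

corpus = (
    "human machine interface for computer applications. "
    "user opinion of computer system response time. "
    "user interface management system. "
    "system engineering for improved response time"
)

# Assumption: a "word" is a whitespace-separated token, lowercased,
# with leading/trailing punctuation (such as the full stops) removed.
tokens = [w.strip(string.punctuation).lower() for w in corpus.split()]

# The vocabulary is the set of distinct non-empty tokens.
vocabulary = sorted({t for t in tokens if t})

print(len(vocabulary))
print(vocabulary)
```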

2. At the input layer of a continuous bag of words model, we multiply a one-hot vector $x \in \mathbb{R}^{|V|}$ with the parameter matrix $W \in \mathbb{R}^{k \times |V|}$. What does each column of $W$ correspond to?

- the representation of the i-th word in the vocabulary

- the i-th eigen vector of the co-occurrence matrix

Answer :-
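The effect of multiplying $W$ by a one-hot vector can be seen on a toy example. The sizes (k = 2, |V| = 4) and the matrix entries below are hypothetical values chosen only for illustration.

```python
# Hypothetical toy sizes: k = 2 embedding dimensions, |V| = 4 words.
W = [
    [0.1, 0.2, 0.3, 0.4],  # row 0 of W (one entry per vocabulary word)
    [0.5, 0.6, 0.7, 0.8],  # row 1 of W
]

def one_hot(i, size):
    """Return a one-hot vector of the given size with a 1 at index i."""
    return [1.0 if j == i else 0.0 for j in range(size)]

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]

i = 2  # index of the input word in the vocabulary
h = matvec(W, one_hot(i, 4))

# Column i of W, read off directly for comparison.
column_i = [row[i] for row in W]

print(h)
print(column_i)
```

Because every other entry of the one-hot vector is zero, the product picks out exactly one column of $W$, so no full matrix multiplication is needed in practice; an index lookup gives the same result.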

3. Suppose that we use the continuous bag of words (CBOW) model to find vector representations of words. Suppose further that we use a context window of size 3 (that is, given the 3 context words, predict the target word, $P(w_t \mid w_i, w_j, w_k)$). The size of word vectors (vector representation of words) is chosen to be 100 and the vocabulary contains 10,000 words. The input to the network is the one-hot encoding (also called 1-of-V encoding) of word(s). How many parameters (weights), excluding bias, are there in $W_{word}$? Enter the answer in thousands. For example, if your answer is 50,000, then just enter 50.

Answer :-
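The arithmetic for this kind of count can be sketched as follows. It assumes that $W_{word}$ is a single matrix of shape k × |V| (embedding size by vocabulary size), shared across the context positions rather than duplicated per context word; under a different architecture the count would change.

```python
vocab_size = 10_000    # |V|, from the question
embedding_dim = 100    # k, size of each word vector
context_size = 3       # number of context words (does not enter the count below)

# Assumption: W_word is one k x |V| matrix with no per-position copies,
# so the context window size does not multiply the parameter count.
params = embedding_dim * vocab_size
params_in_thousands = params // 1000

print(params)
print(params_in_thousands)
```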

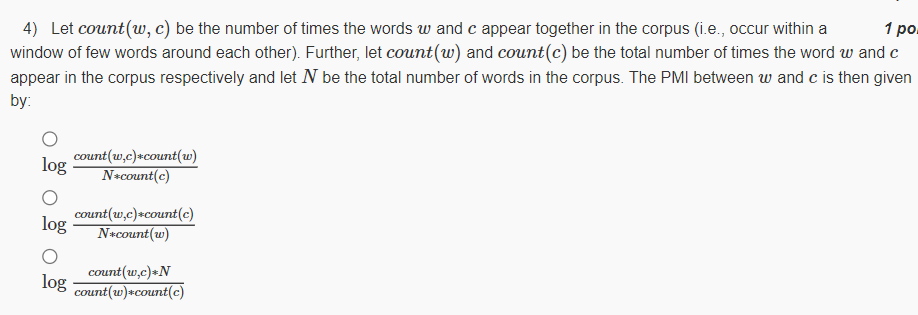

4.

Answer :-

5. Consider a skip-gram model trained using hierarchical softmax for analyzing scientific literature. We observe that the word embeddings for ‘Neuron’ and ‘Brain’ are highly similar. Similarly, the embeddings for ‘Synapse’ and ‘Brain’ also show high similarity. Which of the following statements can be inferred?

- ‘Neuron’ and ‘Brain’ frequently appear in similar contexts

- The model’s learned representations will indicate a high similarity between ‘Neuron’ and ‘Synapse’

- The model’s learned representations will not show a high similarity between ‘Neuron’ and ‘Synapse’

- According to the model’s learned representations, ‘Neuron’ and ‘Brain’ have a low cosine similarity

Answer :-

6. Which of the following is an advantage of the CBOW model compared to the Skip-gram model?

- It is faster to train

- It requires less memory

- It performs better on rare words

- All of the above

Answer :-

7. Which of the following is true about the input representation in the CBOW model?

- Each word is represented as a one-hot vector

- Each word is represented as a continuous vector

- Each word is represented as a sequence of one-hot vectors

- Each word is represented as a sequence of continuous vectors

Answer :-

8. Which of the following is an advantage of using the skip-gram method over the bag-of-words approach?

- The skip-gram method is faster to train

- The skip-gram method performs better on rare words

- The bag-of-words approach is more accurate

- The bag-of-words approach is better for short texts

Answer :-

9. What is the role of the softmax function in the skip-gram method?

- To calculate the dot product between the target word and the context words

- To transform the dot product into a probability distribution

- To calculate the distance between the target word and the context words

- To adjust the weights of the neural network during training

Answer :-
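As a rough illustration of where softmax sits in a skip-gram style model, the sketch below scores a few candidate context words against a centre word by dot product and then normalizes the scores. All vectors and words are hypothetical 2-dimensional toy values, not learned embeddings.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    """Map raw scores to positive values that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vectors (illustrative only).
center = [1.0, 0.5]
context_vectors = {
    "brain": [0.9, 0.4],
    "synapse": [0.8, 0.1],
    "table": [-0.5, 0.2],
}

words = list(context_vectors)
scores = [dot(center, context_vectors[w]) for w in words]
probs = softmax(scores)

for w, p in zip(words, probs):
    print(w, round(p, 3))
```

The dot products themselves can be any real numbers; only after the softmax step do they form a distribution over the candidate words.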

10. How does Hierarchical Softmax reduce the computational complexity of computing the softmax function?

- It replaces the softmax function with a linear function

- It uses a binary tree to approximate the softmax function

- It uses a heuristic to compute the softmax function faster

- It does not reduce the computational complexity of computing the softmax function

Answer :-
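One common formulation of hierarchical softmax places the vocabulary words at the leaves of a binary tree and computes a word's probability as a product of sigmoid decisions along the path from the root to that leaf. The sketch below assumes a balanced tree over a hypothetical 4-word vocabulary with made-up node vectors; real implementations often use a Huffman tree instead.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical vectors for the 3 internal nodes of a balanced tree over 4 words.
node_vectors = [[0.2, -0.1], [0.4, 0.3], [-0.3, 0.5]]

# Path from the root to each leaf: (internal node index, +1 = left, -1 = right).
paths = {
    "neuron":  [(0, +1), (1, +1)],
    "brain":   [(0, +1), (1, -1)],
    "synapse": [(0, -1), (2, +1)],
    "axon":    [(0, -1), (2, -1)],
}

def word_probability(word, h):
    """Product of binary decisions along the word's root-to-leaf path."""
    p = 1.0
    for node, direction in paths[word]:
        p *= sigmoid(direction * dot(node_vectors[node], h))
    return p

h = [1.0, 2.0]  # hypothetical hidden representation
probs = {w: word_probability(w, h) for w in paths}

print(probs)
print(sum(probs.values()))
```

Each word needs only as many sigmoid evaluations as its path length (2 here, about log2 of the vocabulary size for a balanced tree), instead of a normalizing sum over every word. Because sigmoid(z) + sigmoid(-z) = 1 at each internal node, the leaf probabilities still sum to 1.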