NPTEL Introduction To Machine Learning – IITKGP Week 1 Assignment Answers 2024 (July-October)

1. Which of the following is a classification task?

A. Detect pneumonia from chest X-ray image

B. Predict the price of a house based on floor area, number of rooms etc.

C. Predict the temperature for the next day

D. Predict the amount of rainfall

Answer :- a

2. Which of the following is not a type of supervised learning?

A. Classification

B. Regression

C. Clustering

D. None of the above

Answer :-

3. Which of the following tasks is NOT a suitable machine learning task?

A. Finding the shortest path between a pair of nodes in a graph

B. Predicting if a stock price will rise or fall

C. Predicting the price of petroleum

D. Grouping mails as spams or non-spams

Answer :-

4. Suppose I have 10,000 emails in my mailbox, out of which 300 are spam. The spam detection system flags 150 emails as spam, out of which 50 are actually spam. What are the precision and recall of my spam detection system?

A. Precision = 33.33%, Recall = 25%

B. Precision = 25%, Recall = 33.33%

C. Precision = 33.33%, Recall = 16.66%

D. Precision = 75%, Recall = 33.33%

Answer :-

5. Which of the following is/are supervised learning problems?

A. Predicting disease from blood samples.

B. Grouping students in the same class based on similar features.

C. Face recognition to unlock your phone.

Answer :-

6. Aliens challenge you to a complex game that no human has seen before. They give you time to learn the game and develop strategies before the final showdown. You choose to use machine learning because an intelligent machine is your only hope. Which machine learning paradigm should you choose for this?

A. Supervised learning

B. Unsupervised learning

C. Reinforcement learning

D. Use a random number generator and hope for the best

Answer :-

7. How many Boolean functions are possible with N features?

A. 2^(2^N)

B. 2^N

C. N^2

D. 4^N

Answer :-

8. What is the use of Validation dataset in Machine Learning?

A. To train the machine learning model.

B. To evaluate the performance of the machine learning model

C. To tune the hyperparameters of the machine learning model

D. None of the above.

Answer :-

9. Regarding bias and variance, which of the following statements are true? (Here ‘high’ and ‘low’ are relative to the ideal model.)

A. Models which overfit have a high bias.

B. Models which overfit have a low bias.

C. Models which underfit have a high variance.

D. Models which underfit have a low variance.

Answer :-

10. Which of the following is a categorical feature?

A. Height of a person

B. Price of petroleum

C. Mother tongue of a person

D. Amount of rainfall in a day

Answer :-

NPTEL Introduction To Machine Learning – IITKGP Week 3 Assignment Answer 2023

Q1. Fill in the blanks:

K-Nearest Neighbor is a ______, ______ algorithm.

a. Non-parametric, eager

b. Parametric, eager

c. Non-parametric, lazy

d. Parametric, lazy

Answer :- c

2. You have been given the following 2 statements. Find out which of these options is/are true in the case of k-NN.

(i) In case of very large value of k, we may include points from other classes into the neighborhood.

(ii) In case of too small value of k, the algorithm is very sensitive to noise.

a. (i) is True and (ii) is False

b. (i) is False and (ii) is True

c. Both are True

d. Both are False

Answer :- c
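Both statements can be checked with a minimal k-NN sketch. The training points, labels, and the `knn_predict` helper below are hypothetical, chosen only to illustrate the effect of k. Note that there is no training step: all distance computation happens at prediction time, which is why k-NN is called lazy.

```python
from collections import Counter

def knn_predict(train, query, k):
    """Return the majority label among the k training points nearest to query."""
    by_distance = sorted(
        train,
        key=lambda item: (item[0][0] - query[0]) ** 2 + (item[0][1] - query[1]) ** 2,
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical data: a cluster of "A" near the origin, a cluster of "B" far away,
# and one noisy "B" point sitting inside the "A" cluster.
train = [
    ((0.0, 0.0), "A"), ((0.0, 1.0), "A"), ((1.0, 0.0), "A"),
    ((0.4, 0.4), "B"),  # noisy point
    ((5.0, 5.0), "B"), ((5.0, 6.0), "B"), ((6.0, 5.0), "B"),
]
query = (0.5, 0.5)

small_k = knn_predict(train, query, k=1)   # decided by the single noisy neighbor
medium_k = knn_predict(train, query, k=3)  # noise is outvoted by nearby "A" points
large_k = knn_predict(train, query, k=7)   # far-away "B" points join the vote
print(small_k, medium_k, large_k)
```

With k=1 the noisy neighbor decides the label, and with k=7 points from the distant class dominate the vote, which matches statements (ii) and (i) respectively.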

3. State whether the statement is True/False: The k-NN algorithm does more computation at test time than at training time.

a. True

b. False

Answer :-

4. Suppose you are given the following images (1 represents the left image, 2 represents the middle and 3 represents the right). Your task is to find the value of k in k-NN in each of the images shown below. Here k1 is for the 1st, k2 is for the 2nd and k3 is for the 3rd figure.

a. k1 > k2 > k3

b. k1 < k2 > k3

c. k1 < k2 < k3

d. None of these

Answer :-

5. Which of the following necessitates feature reduction in machine learning?

a. Irrelevant and redundant features

b. Limited training data

c. Limited computational resources.

d. All of the above

Answer :-

6. Suppose, you have given the following data where x and y are the 2 input variables and Class is the dependent variable.

Answer :-

7. What is the optimum number of principal components in the below figure?

a. 10

b. 20

c. 30

d. 40

Answer :-

8. Suppose we are using dimensionality reduction as a pre-processing technique, i.e., instead of using all the features, we reduce the data to k dimensions with PCA and then use these PCA projections as our features. Which of the following statements is correct?

a. Higher value of ‘k’ means more regularization

b. Higher value of ‘k’ means less regularization

Answer :-

9. In collaborative filtering-based recommendation, the items are recommended based on :

a. Similar users

b. Similar items

c. Both of the above

d. None of the above

Answer :-

10. The major limitation of collaborative filtering is:

a. Cold start

b. Overspecialization

c. None of the above

Answer :-

11. Consider the figures below. Which figure shows the most probable PC component directions for the data points?

Answer :-

12. Suppose that you wish to reduce the number of dimensions of a given data set to k dimensions using PCA. Which of the following statements is correct?

a. Higher k means more regularization

b. Higher k means less regularization

c. Can’t Say

Answer :-

13. Suppose you are given 7 plots 1-7 (left to right) and you want to compare Pearson correlation coefficients between variables of each plot. Which of the following is true?

Answer :-

14. Imagine you are dealing with a 20-class classification problem. What is the maximum number of discriminant vectors that can be produced by LDA?

a. 20

b. 19

c. 21

d. 10

Answer :-

15. In which of the following situations is a collaborative filtering algorithm appropriate?

a. You manage an online bookstore and you have the book ratings from many users. For each user, you want to recommend other books he/she will like based on her previous ratings and other users’ ratings.

b. You manage an online bookstore and you have the book ratings from many users. You want to predict the expected sales volume (No of books sold) as a function of average rating of a book.

c. Both A and B

d. None of the above

Answer :-

NPTEL Introduction To Machine Learning – IITKGP Week 2 Assignment Answer 2023

1. What is Entropy(Emotion | Wig = Y)?

a. 1

b. 0

c. 0.50

d. 0.20

Answer :- a

2. What is Entropy(Emotion | Ears = 3)?

a. 1

b. 0

c. 0.50

d. 0.20

Answer :- b
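The data table for these two entropy questions is not reproduced on this page, so the label lists below are hypothetical stand-ins, not the assignment's data. The sketch only shows how an entropy of 1 (an even two-class split) and an entropy of 0 (a pure subset) arise from the standard definition, the sum of -p * log2(p) over the classes.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return sum(-(count / total) * log2(count / total)
               for count in Counter(labels).values())

# Hypothetical subsets of the Emotion label after conditioning on an attribute value.
even_split = ["happy", "sad", "happy", "sad"]  # two classes, equally frequent
pure_subset = ["happy", "happy", "happy"]      # only one class present

even_entropy = entropy(even_split)
pure_entropy = entropy(pure_subset)
print(even_entropy, pure_entropy)
```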

3. Which attribute should you choose as root of the decision tree?

a. Color

b. Wig

c. Number of ears

d. Any one of the previous three attributes

Answer :- a

4. In linear regression, the output is:

a. Discrete

b. Categorical

c. Continuous

d. May be discrete or continuous

Answer :- c

5. Consider applying linear regression with the hypothesis h_θ(x) = θ_0 + θ_1 x. The training data is given in the table.

where m is the number of training examples and h_θ(x^(i)) is the value of the linear regression hypothesis at point i. If θ = [1, 1], find J(θ).

a. 0

b. 1

c. 2

d. 0.5

Answer :- b

6. Specify whether the following statement is true or false? “The ID3 algorithm is guaranteed to find the optimal decision tree”

a. True

b. False

Answer :- b

7. Identify whether the following statement is true or false? “A classifier trained on less training data is less likely to overfit”

a. True

b. False

Answer :- b

8. Identify whether the following statement is true or false? “Overfitting is more likely when the hypothesis space is small”

a. True

b. False

Answer :- b

9. Traditionally, when we have a real-valued input attribute during decision-tree learning, we consider a binary split according to whether the attribute is above or below some threshold. One of your friends suggests that instead we should just have a multiway split with one branch for each of the distinct values of the attribute. From the list below choose the single biggest problem with your friend’s suggestion:

a. It is too computationally expensive

b. It would probably result in a decision tree that scores badly on the training set and a test set

c. It would probably result in a decision tree that scores well on the training set but badly on a test set

d. It would probably result in a decision tree that scores well on a test set but badly on a training set

Answer :- c

10. Which of the following statements about decision trees is/are true?

a. Decision trees can handle both categorical and numerical data.

b. Decision trees are resistant to overfitting.

c. Decision trees are not interpretable.

d. Decision trees are only suitable for binary classification problems.

Answer :- a

11. Which of the following techniques can be used to handle overfitting in decision trees?

a. Pruning

b. Increasing the tree depth

c. Decreasing the minimum number of samples required to split a node

d. Adding more features to the dataset

Answer :- a

12. Which of the following is a measure used for selecting the best split in decision trees?

a. Gini Index

b. Support Vector Machine

c. K-Means Clustering

d. Naive Bayes

Answer :- a
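As a rough illustration of how the Gini index scores a candidate split, the sketch below computes Gini impurity, 1 minus the sum of squared class proportions, for a node. The label lists and the `gini` helper are hypothetical examples, not part of the assignment.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum of squared class proportions."""
    total = len(labels)
    return 1.0 - sum((count / total) ** 2 for count in Counter(labels).values())

# Hypothetical node contents.
mixed_node = ["yes", "yes", "no", "no"]  # evenly mixed two-class node
pure_node = ["yes", "yes", "yes"]        # single-class node

mixed_gini = gini(mixed_node)
pure_gini = gini(pure_node)
print(mixed_gini, pure_gini)
```

A split is preferred when the size-weighted impurity of its child nodes is lower than that of the alternatives.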

13. What is the purpose of the decision tree’s root node in machine learning?

a. It represents the class labels of the training data.

b. It serves as the starting point for tree traversal during prediction.

c. It contains the feature values of the training data.

d. It determines the stopping criterion for tree construction.

Answer :- b

14. Which of the following statements about linear regression is true?

a. Linear regression is a supervised learning algorithm used for both regression and classification tasks.

b. Linear regression assumes a linear relationship between the independent and dependent variables.

c. Linear regression is not affected by outliers in the data.

d. Linear regression can handle missing values in the dataset.

Answer :- b

15. Which of the following techniques can be used to mitigate overfitting in machine learning?

a. Regularization

b. Increasing the model complexity

c. Gathering more training data

d. Feature selection or dimensionality reduction

Answer :- a, c, d

NPTEL Introduction To Machine Learning – IITKGP Week 1 Assignment Answer 2023

1. Which of the following is/are classification tasks?

a. Find the gender of a person by analyzing his writing style

b. Predict the price of a house based on floor area, number of rooms, etc.

c. Predict whether there will be abnormally heavy rainfall next year

d. Predict the number of copies of a book that will be sold this month

Answer :- a. Find the gender of a person by analyzing his writing style; c. Predict whether there will be abnormally heavy rainfall next year

Explanation: Finding the gender of a person based on writing style involves classifying the person into one of two classes, male or female. Predicting whether there will be abnormally heavy rainfall next year involves classifying the outcome as either "abnormally heavy rainfall" or "not abnormally heavy rainfall", so it can be treated as a binary classification problem.

2. A feature F1 can take certain values: A, B, C, D, E, F, and represents the grade of students from a college. Which of the following statement is true in the following case?

a. Feature F1 is an example of a nominal variable.

b. Feature F1 is an example of an ordinal variable.

c. It doesn’t belong to any of the above categories.

d. Both of these

Answer :- b. Feature F1 is an example of an ordinal variable. Explanation: In statistics, variables can be classified into different types, and two common types are nominal and ordinal variables. Nominal variables are categorical variables with no inherent order. The categories in a nominal variable cannot be ranked or ordered in any meaningful way. Examples of nominal variables are eye color, country names, or the types of fruits. Ordinal variables, on the other hand, have categories with a natural order or ranking. While the exact differences between the categories may not be well-defined, there is a relative ordering among them. Examples of ordinal variables are educational levels (e.g., high school, undergraduate, graduate) or ratings like "good," "better," and "best." In this case, the feature F1 represents the grades of students from a college, and these grades likely have an inherent order such as A being better than B, and so on. Therefore, F1 is an example of an ordinal variable.

3. Suppose I have 10,000 emails in my mailbox out of which 200 are spams. The spam detection system detects 150 emails as spams, out of which 50 are actually spam. What is the precision and recall of my spam detection system?

a. Precision = 33.33%, Recall = 25%

b. Precision = 25%, Recall = 33.33%

c. Precision = 33.33%, Recall = 75%

d. Precision = 75%, Recall = 33.33%

Answer :- a. Precision = 33.33%, Recall = 25%

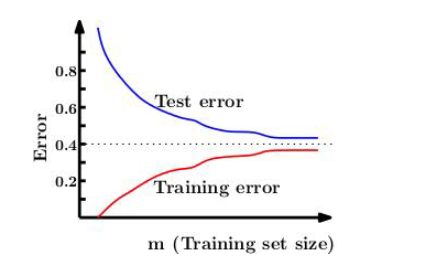
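The arithmetic behind this answer can be checked with a short sketch. The function and variable names are illustrative; the counts come from the question: 50 correctly flagged spam emails, 150 flagged in total, and 200 actual spam emails.

```python
def precision_recall(true_positives, predicted_positives, actual_positives):
    """Precision = TP / (all predicted positive); Recall = TP / (all actual positive)."""
    precision = true_positives / predicted_positives
    recall = true_positives / actual_positives
    return precision, recall

# Counts taken from the question text.
precision, recall = precision_recall(
    true_positives=50, predicted_positives=150, actual_positives=200
)
print(f"Precision = {precision:.2%}, Recall = {recall:.2%}")
# prints "Precision = 33.33%, Recall = 25.00%"
```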

4. Which of the following statements describes what is most likely TRUE when the amount of training data increases?

a. Training error usually decreases and generalization error usually increases.

b. Training error usually decreases and generalization error usually decreases.

c. Training error usually increases and generalization error usually decreases.

d. Training error usually increases and generalization error usually increases.

Answer :- c

5. You trained a learning algorithm and plotted the learning curve. The following figure is obtained.

The algorithm is suffering from

a. High bias

b. High variance

c. Neither

Answer :- a

6. I am the marketing consultant of a leading e-commerce website. I have been given a task of making a system that recommends products to users based on their activity on Facebook. I realize that user interests could be highly variable. Hence, I decide to

T1) Cluster the users into communities of like-minded people and

T2) Train separate models for each community to predict which product category (e.g., electronic gadgets, cosmetics, etc.) would be the most relevant to that community.

The task T1 is a/an ________ learning problem and T2 is a/an ________ learning problem.

Choose from the options:

a. Supervised and unsupervised

b. Unsupervised and supervised

c. Supervised and supervised

d. Unsupervised and unsupervised

Answer :- b

7. Select the correct equations.

TP – True Positive, TN – True Negative, FP – False Positive, FN – False Negative

i. Precision = TP / (TP + FP)

ii. Recall = FP / (TP + FP)

iii. Recall = TP / (TP + FN)

iv. Accuracy = (TP + TN) / (TP + FP + TN + FN)

a. i, iii, iv

b. i and iii

c. ii and iv

d. i, ii, iii, iv

Answer :- a. i, iii, iv
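To see how these definitions apply to actual predictions, the sketch below counts TP, TN, FP and FN from two label lists and then evaluates precision, recall and accuracy. The label lists and the `confusion_counts` helper are hypothetical, with 1 denoting the positive class.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn

# Hypothetical ground truth and predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
accuracy = (tp + tn) / (tp + tn + fp + fn)
print(tp, tn, fp, fn, precision, recall, accuracy)
```

In this toy case accuracy is noticeably higher than recall, which is typical when the negative class dominates.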

8. Which of the following tasks is NOT a suitable machine learning task(s)?

a. Finding the shortest path between a pair of nodes in a graph

b. Predicting if a stock price will rise or fall

c. Predicting the price of petroleum

d. Grouping mails as spams or non-spams

Answer :- a. Finding the shortest path between a pair of nodes in a graph. Explanation: Machine learning is not typically used for finding the shortest path between nodes in a graph. This problem can be efficiently solved using algorithms like Dijkstra's algorithm or A* search algorithm, which are specifically designed for this purpose and do not involve learning from data.
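For contrast with a learned model, here is a minimal sketch of Dijkstra's algorithm using Python's `heapq`. The graph, its edge weights, and the function name are made up for illustration. The result is computed exactly from the graph structure, with no training data involved.

```python
import heapq

def dijkstra(graph, source, target):
    """Return the length of the shortest path from source to target, or None if unreachable.

    graph maps each node to a list of (neighbor, non-negative edge weight) pairs.
    """
    best = {source: 0}
    heap = [(0, source)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == target:
            return dist
        if dist > best.get(node, float("inf")):
            continue  # stale heap entry; a shorter route to node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = dist + weight
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return None

# Hypothetical directed graph.
graph = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 5)],
    "C": [("B", 2)],
    "D": [],
}
shortest = dijkstra(graph, "A", "D")  # A -> C -> B -> D
print(shortest)
```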

9. Which of the following is/are associated with overfitting in machine learning?

a. High bias

b. Low bias

c. Low variance

d. High variance

e. Good performance on training data

f. Poor performance on test data

Answer :- b. Low bias d. High variance e. Good performance on training data f. Poor performance on test data Explanation: High variance (option d) refers to a model that is too complex and captures noise or random fluctuations in the training data. As a result, it performs well on the training data but poorly on unseen test data, indicating overfitting. Good performance on training data (option e) is a common characteristic of overfitting. The overfitted model fits the training data closely, leading to high accuracy or low error on the training set. Poor performance on test data (option f) is another sign of overfitting. The overfitted model does not generalize well to new, unseen data, resulting in lower accuracy or higher error on the test set compared to the training set.

10. Which of the following statements about cross-validation in machine learning is/are true?

a. Cross-validation is used to evaluate a model’s performance on the training data.

b. Cross-validation guarantees that a model will generalize well to unseen data.

c. Cross-validation is only applicable to classification problems and not regression problems.

d. Cross-validation helps in estimating the model’s performance on unseen data by simulating the test phase.

Answer :- d

11. What does k-fold cross-validation involve in machine learning?

a. Splitting the dataset into k equal-sized training and test sets.

b. Splitting the dataset into k unequal-sized training and test sets.

c. Partitioning the dataset into k subsets, and iteratively using each subset as a validation set while the remaining k-1 subsets are used for training.

d. Dividing the dataset into k subsets, where each subset represents a unique class label for classification tasks.

Answer :- c
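The partitioning described in option c can be sketched in plain Python. The helper name `k_fold_indices` and the sample count are assumptions for illustration. Libraries such as scikit-learn provide their own implementations, which also support shuffling.

```python
def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k folds; yield (train_indices, validation_indices) pairs."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds = []
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        folds.append((train, validation))
        start += size
    return folds

folds = k_fold_indices(n_samples=10, k=5)
for train, validation in folds:
    print("train:", train, "validation:", validation)
```

Each sample lands in the validation set exactly once and in the training set for the other k-1 iterations.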

12. What does the term “feature space” refer to in machine learning?

a. The space where the machine learning model is trained.

b. The space where the machine learning model is deployed.

c. The space which is formed by the input variables used in a machine learning model.

d. The space where the output predictions are made by a machine learning model.

Answer :- c. The space which is formed by the input variables used in a machine learning model. Explanation: In machine learning, the term "feature space" refers to the space formed by the input variables (features) used to train a machine learning model. Each data point in the dataset represents a point in this feature space, where the coordinates are the values of the input features. For example, if you have a dataset with two features, such as "age" and "income," then the feature space would be a two-dimensional space where each data point is represented by a pair of values (age, income). Machine learning algorithms work by trying to find patterns and relationships in this feature space that can be used to make predictions or classifications. The goal is to find a decision boundary or decision surface that separates different classes or groups based on their feature values.

13. Which of the following statements is/are true regarding supervised and unsupervised learning?

a. Supervised learning can handle both labeled and unlabeled data.

b. Unsupervised learning requires human experts to label the data.

c. Supervised learning can be used for regression and classification tasks.

d. Unsupervised learning aims to find hidden patterns in the data.

Answer :- c. Supervised learning can be used for regression and classification tasks. d. Unsupervised learning aims to find hidden patterns in the data.

14. One of the ways to mitigate overfitting is

a. By increasing the model complexity

b. By reducing the amount of training data

c. By adding more features to the model

d. By decreasing the model complexity

Answer :- d. By decreasing the model complexity

15. How many Boolean functions are possible with N features?

a. 2^(2^N)

b. 2^N

c. N^2

d. 4^N

Answer :- a. 2^(2^N)
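The count can be verified by brute force for small N, assuming the N features are binary. N binary features give 2^N distinct input rows, and a Boolean function assigns 0 or 1 to each row independently, so there are 2^(2^N) functions. The helper name below is illustrative.

```python
from itertools import product

def count_boolean_functions(n):
    """Enumerate every truth table over n binary inputs and count them."""
    input_rows = list(product([0, 1], repeat=n))  # 2**n possible inputs
    # A function is one choice of output bit per input row.
    truth_tables = set(product([0, 1], repeat=len(input_rows)))
    return len(truth_tables)

counts = {n: count_boolean_functions(n) for n in range(1, 4)}
print(counts)  # prints {1: 4, 2: 16, 3: 256}
```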
