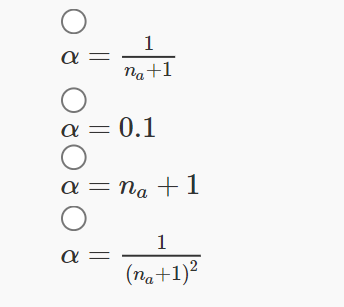

1. In the update rule $Q_{t+1}(a) \leftarrow Q_t(a) + \alpha\,(R_t - Q_t(a))$, select the value of $\alpha$ that we would prefer to estimate Q values in a non-stationary bandit problem.

Answer :-
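The update rule in question 1 can be tried directly. Below is a minimal sketch, assuming a hypothetical arm whose deterministic reward jumps from 0 to 1 halfway through. It compares the sample-average step size $\alpha = 1/n$ with a constant $\alpha = 0.1$; the function names `incremental_update` and `track` are illustrative, not from the course material.

```python
def incremental_update(q, reward, alpha):
    """One step of Q_{t+1}(a) = Q_t(a) + alpha * (R_t - Q_t(a))."""
    return q + alpha * (reward - q)


def track(rewards, alpha=None):
    """Apply the update over a reward sequence for a single arm.

    alpha=None uses the sample-average step size 1/n; otherwise alpha is constant.
    """
    q = 0.0
    for n, r in enumerate(rewards, start=1):
        step = 1.0 / n if alpha is None else alpha
        q = incremental_update(q, r, step)
    return q


# Hypothetical non-stationary arm: the true reward switches from 0 to 1 halfway.
rewards = [0.0] * 100 + [1.0] * 100
q_avg = track(rewards)               # sample average: every reward weighted equally
q_const = track(rewards, alpha=0.1)  # constant alpha: recent rewards weighted more
print(f"sample average: {q_avg:.3f}, constant alpha: {q_const:.3f}")
```

With a constant step size the estimate is an exponentially recency-weighted average of past rewards, so it follows the jump, while the sample average stays near the overall mean of 0.5.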

2. The “credit assignment problem” is the problem of correctly attributing accumulated rewards to the action(s) that produced them. Which of the following is/are reasons for the credit assignment problem in RL? (Select all that apply)

- Reward for an action may only be observed after many time steps.

- An agent may get the same reward for multiple actions.

- The agent discounts rewards that occurred in previous time steps.

- Rewards can be positive or negative.

Answer :-
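To see why delayed rewards make credit assignment hard, here is a minimal sketch that computes discounted returns $G_t = R_{t+1} + \gamma G_{t+1}$ for a hypothetical four-step episode in which only the last action is rewarded. The episode and $\gamma = 0.9$ are assumptions for illustration; `rewards[t]` is taken to be the reward received after the action at step `t`.

```python
def discounted_returns(rewards, gamma):
    """Compute G_t = R_{t+1} + gamma * G_{t+1} for every step, working backwards."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g  # later rewards are discounted by gamma per step
        returns[t] = g
    return returns


# Hypothetical episode: the only reward arrives after the final action.
rewards = [0.0, 0.0, 0.0, 1.0]
print(discounted_returns(rewards, gamma=0.9))
```

Every earlier step receives some share of the single final reward (roughly 0.729, 0.81, 0.9, 1.0), but the return alone does not say which of the earlier actions actually caused it.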

3. Assertion1: In stationary bandit problems, we can achieve asymptotically correct behaviour by selecting exploratory actions with a fixed non-zero probability without decaying exploration.

Assertion2: In non-stationary bandit problems, it is important that we decay the probability of exploration to zero over time in order to achieve asymptotically correct behaviour.

- Assertion1 and Assertion2 are both True.

- Assertion1 is True and Assertion2 is False.

- Assertion1 is False and Assertion2 is True.

- Assertion1 and Assertion2 are both False.

Answer :-

4. We are trying different algorithms to find the optimal arm for a multi-armed bandit. The expected payoff for each algorithm corresponds to some function of time t (with time starting from 0). Given that the optimal expected payoff is 1, which among the following functions corresponds to the algorithm with the least regret? (Hint: Plot the functions.)

- tanh(t/5)

- $1 - 2^{-t}$

- t/20 if t < 20 and 1 after that

- Same regret for all the above functions.

Answer :-
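As an alternative to plotting, the regret of each payoff curve can be summed numerically. This is a minimal sketch, assuming regret is the cumulative shortfall $\sum_t (1 - f(t))$ over integer time steps and a finite horizon of 200 steps (both assumptions, since the question does not fix them); `cumulative_regret` is an illustrative name.

```python
import math


def cumulative_regret(payoff, horizon, optimal=1.0):
    """Sum of (optimal - payoff(t)) over t = 0 .. horizon - 1."""
    return sum(optimal - payoff(t) for t in range(horizon))


# The three payoff curves from question 4, as functions of the time step t.
candidates = {
    "tanh(t/5)": lambda t: math.tanh(t / 5),
    "1 - 2^(-t)": lambda t: 1 - 2 ** (-t),
    "t/20 then 1": lambda t: t / 20 if t < 20 else 1.0,
}

for name, f in candidates.items():
    print(f"{name:>12}: regret over 200 steps = {cumulative_regret(f, 200):.3f}")
```

All three curves eventually reach the optimal payoff, so the regret differences come entirely from how fast each one gets there in the early steps.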

5. Which of the following is/are correct and valid reasons to consider sampling actions from a softmax distribution instead of using an ε-greedy approach?

i Softmax exploration makes the probability of picking an action proportional to the action-value estimates. By doing so, it avoids wasting time exploring obviously ‘bad’ actions.

ii We do not need to worry about decaying exploration slowly like we do in the ε-greedy case. Softmax exploration gives us asymptotic correctness even for a sharp decrease in temperature.

iii It helps us differentiate between actions with action-value estimates (Q values) that are very close to the action with maximum Q value.

Which of the above statements is/are correct?

- i, ii, iii

- only iii

- only i

- i, ii

Answer :-
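A minimal sketch of softmax (Boltzmann) action selection may help when weighing the statements in question 5. It assumes the common form $\pi(a) = e^{Q(a)/\tau} / \sum_b e^{Q(b)/\tau}$ with temperature $\tau$; the Q values are hypothetical (two close arms and one clearly bad arm), and the function names are illustrative.

```python
import math
import random


def softmax_probs(q_values, temperature):
    """Boltzmann probabilities exp(Q/tau) / sum_b exp(Q_b/tau)."""
    m = max(q_values)  # subtract the max for numerical stability; result is unchanged
    exps = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(q_values, temperature, rng=random):
    """Draw an action index according to the softmax probabilities."""
    probs = softmax_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


q = [1.0, 0.95, -2.0]  # hypothetical estimates: two close arms, one clearly bad arm
for tau in (1.0, 0.1, 0.01):
    print(tau, [round(p, 3) for p in softmax_probs(q, tau)])
print("sampled action:", sample_action(q, temperature=1.0))
```

Unlike ε-greedy, which picks uniformly among all actions when it explores, the bad arm here gets little probability mass. Lowering $\tau$ concentrates the distribution on the greedy arm, and the probabilities scale with $e^{Q/\tau}$ rather than with $Q$ itself.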

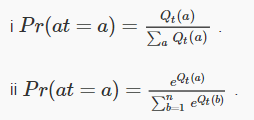

Answer :-

7. What are the properties of a solution method that is PAC Optimal?

- (a) It is guaranteed to find the correct solution.

- (b) It minimizes sample complexity to make the PAC guarantee.

- (c) It always reaches optimal behaviour faster than an algorithm that is simply asymptotically correct.

- Both (a) and (b)

- Both (b) and (c)

- Both (a) and (c)

Answer :-

8. Consider the following statements:

i The agent must receive a reward for every action taken in order to learn an optimal policy.

ii Reinforcement Learning is neither supervised nor unsupervised learning.

iii Two reinforcement learning agents cannot learn by playing against each other.

iv Always selecting the action with maximum reward will automatically maximize the probability of winning a game.

Which of the above statements is/are correct?

- i, ii, iii

- only ii

- ii, iii

- iii, iv

Answer :-

9. Assertion: Taking exploratory actions is important for RL agents.

Reason: If the rewards obtained for actions are stochastic, an action that gave a high reward once might give a lower reward the next time.

- Assertion and Reason are both true and Reason is a correct explanation of Assertion

- Assertion and Reason are both true and Reason is not a correct explanation of Assertion

- Assertion is true and Reason is false

- Both Assertion and Reason are false

Answer :-

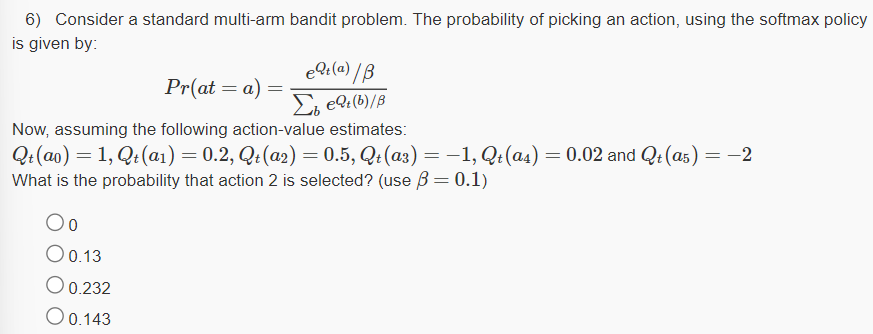

10. The following are two ways of defining the probability of selecting an action/arm in a softmax policy. Which among the following is the better choice and why?

- (i) is the better choice as it requires less complex computation.

- (ii) is the better choice as it can also deal with negative values of $Q_t(a)$.

- Both are good, as both formulas bound the probability to the range 0 to 1.

- (i) is better because it can differentiate well between close values of $Q_t(a)$.

Answer :-
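The two formulas for question 10 appear only in the image above, so this sketch assumes (without confirmation from the source) that one is the exponential form $e^{Q_t(a)/\tau} / \sum_b e^{Q_t(b)/\tau}$ and the other is the plain normalization $Q_t(a) / \sum_b Q_t(b)$. It shows how each behaves when some estimates are negative; the Q values and function names are hypothetical.

```python
import math


def boltzmann(q_values, temperature=1.0):
    """exp(Q/tau) / sum exp(Q/tau): a valid distribution for any real Q values."""
    m = max(q_values)  # shift by the max for numerical stability
    exps = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def linear_normalization(q_values):
    """Q / sum(Q): a valid distribution only when every Q value is positive."""
    total = sum(q_values)  # can be zero or negative, which breaks the formula
    return [q / total for q in q_values]


q = [2.0, -1.0, 0.5]  # hypothetical estimates including a negative one
print("boltzmann:", [round(p, 3) for p in boltzmann(q)])
print("linear:   ", [round(p, 3) for p in linear_normalization(q)])
```

Because the exponential is always positive, the first form yields non-negative probabilities that sum to 1, whereas plain normalization here produces a negative "probability" and one greater than 1.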
