NPTEL Reinforcement Learning Week 9 Assignment Answers 2024

1. Which of the following is true about DQN?

- It can be efficiently used for very large state spaces

- It can be efficiently used for continuous action spaces

Answer :-

2. How many outputs does the final layer of a DQN produce (|S| and |A| represent the total number of states and actions in the environment, respectively)?

- |S| × |A|

- |S|

- |A|

- None of these

Answer :-

3. What are the reasons behind using an experience replay buffer in DQN?

- Random sampling from experience replay buffer breaks correlations among transitions.

- It leads to efficient usage of real-world samples.

- It guarantees convergence to the optimal policy.

- None of the above

Answer :-
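The random-sampling mechanism referred to in the options can be sketched as below. This is a minimal illustration using only the Python standard library; the `Transition` fields, the `ReplayBuffer` class, and the capacity value are hypothetical names chosen for this example and are not part of the assignment.

```python
import random
from collections import deque, namedtuple

# Hypothetical transition record; the field names are illustrative.
Transition = namedtuple(
    "Transition", ["state", "action", "reward", "next_state", "done"]
)


class ReplayBuffer:
    """Fixed-capacity buffer; the oldest transitions are evicted first."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement: a minibatch mixes transitions
        # from different time steps, and a stored transition can be drawn
        # again in later minibatches.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=5)
for t in range(8):
    buffer.push(state=t, action=0, reward=1.0, next_state=t + 1, done=False)
batch = buffer.sample(batch_size=3)
```

With a capacity of 5 and 8 pushes, only the five most recent transitions remain available for sampling.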

4. Assertion: DQN is implemented with a current network and a target network.

Reason: Using a target network helps avoid chasing a non-stationary target.

- Both Assertion and Reason are true, and Reason is the correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not the correct explanation for Assertion.

- Assertion is true, Reason is false

- Both Assertion and Reason are false

Answer :-
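For readers unfamiliar with the two-network setup in the question, here is a toy sketch of how the bootstrap target is read from a periodically synced copy. It uses small Q-tables in place of neural networks; the `ToyDQN` class, `sync_every`, and all numeric values are assumptions made for illustration only.

```python
import copy


class ToyDQN:
    """Tabular stand-in for DQN's current (online) and target networks."""

    def __init__(self, n_states, n_actions, gamma=0.9, lr=0.5, sync_every=3):
        self.online_q = [[0.0] * n_actions for _ in range(n_states)]
        self.target_q = copy.deepcopy(self.online_q)
        self.gamma = gamma
        self.lr = lr
        self.sync_every = sync_every  # hypothetical sync period, in updates
        self.steps = 0

    def td_target(self, reward, next_state, done):
        # The bootstrap term comes from the frozen copy, so the regression
        # target stays fixed between syncs even though online_q changes.
        if done:
            return reward
        return reward + self.gamma * max(self.target_q[next_state])

    def update(self, state, action, reward, next_state, done):
        y = self.td_target(reward, next_state, done)
        self.online_q[state][action] += self.lr * (y - self.online_q[state][action])
        self.steps += 1
        if self.steps % self.sync_every == 0:
            # Periodic hard sync: copy the online values into the target.
            self.target_q = copy.deepcopy(self.online_q)


agent = ToyDQN(n_states=1, n_actions=2)
for _ in range(3):
    agent.update(state=0, action=1, reward=1.0, next_state=0, done=False)
```

During the first three updates the target stays at 1.0 because `target_q` is still all zeros; only after the sync does the bootstrap term change.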

5. Assertion: Actor-critic updates have lower variance than REINFORCE updates.

Reason: Actor-critic methods use the TD target instead of the return G_t.

- Both Assertion and Reason are true, and Reason is the correct explanation for Assertion.

- Both Assertion and Reason are true, but Reason is not the correct explanation for Assertion.

- Assertion is true, Reason is false

- Both Assertion and Reason are false

Answer :-
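The two quantities named in the question can be computed as in the sketch below. The function names, the reward sequence, and the critic estimates in `next_values` are hypothetical values chosen so the arithmetic is easy to follow; a one-step TD target is assumed.

```python
def monte_carlo_returns(rewards, gamma):
    """G_t = r_{t+1} + gamma * G_{t+1}, computed backwards over a full episode."""
    returns, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]


def td_targets(rewards, next_values, gamma):
    """One-step target r_{t+1} + gamma * V(s_{t+1}) from a critic's estimates."""
    return [r + gamma * v for r, v in zip(rewards, next_values)]


rewards = [1.0, 0.0, 2.0]
# Hypothetical critic estimates of V(s_{t+1}); the final next state is terminal.
next_values = [1.0, 2.0, 0.0]
gamma = 0.5

mc = monte_carlo_returns(rewards, gamma)
td = td_targets(rewards, next_values, gamma)
```

Each entry of `mc` depends on every remaining reward in the episode, while each entry of `td` depends on a single sampled reward plus the critic's estimate. In this example the critic estimates happen to be exact, so both lists agree.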

6. Suppose we are using a policy gradient method to solve a reinforcement learning problem. Assuming that the policy returned by the method is not optimal, which among the following are plausible reasons for such an outcome?

- The search procedure converged to a locally optimal policy.

- The search procedure was terminated before it could reach an optimal policy.

- An optimal policy could not be represented by the parameterisation used to represent the policy.

- None of these

Answer :-

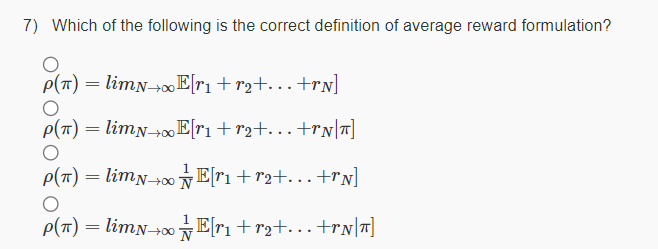

7.

Answer :-

8. State True or False:

Monte Carlo policy gradient methods typically converge faster than the actor-critic methods, given that we use similar parameterisations and that the approximation to the Qπ used in the actor-critic method satisfies the compatibility criteria.

- True

- False

Answer :-

9. When using policy gradient methods, if we make use of the average reward formulation rather than the discounted reward formulation, then is it necessary to assign a designated start state, s0?

- Yes

- No

- Can’t say

Answer :-

10. State True or False:

Exploration techniques like softmax (or other equivalent techniques) are not needed for DQN as the randomisation provided by experience replay provides sufficient exploration.

- True

- False

Answer :-
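As background for the softmax technique named in the question, a minimal sketch of softmax (Boltzmann) action selection over Q-values follows. The function names and the `temperature` parameter are assumptions for illustration; only the Python standard library is used.

```python
import math
import random


def softmax_probs(q_values, temperature=1.0):
    """Turn Q-values into action probabilities; higher Q gets higher probability."""
    m = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(q_values, temperature=1.0, rng=random):
    """Draw an action index at random according to the softmax probabilities."""
    probs = softmax_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


probs = softmax_probs([1.0, 2.0, 3.0])
action = sample_action([1.0, 2.0, 3.0])
```

A larger `temperature` flattens the distribution toward uniform, and a smaller one concentrates it on the highest-valued action.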